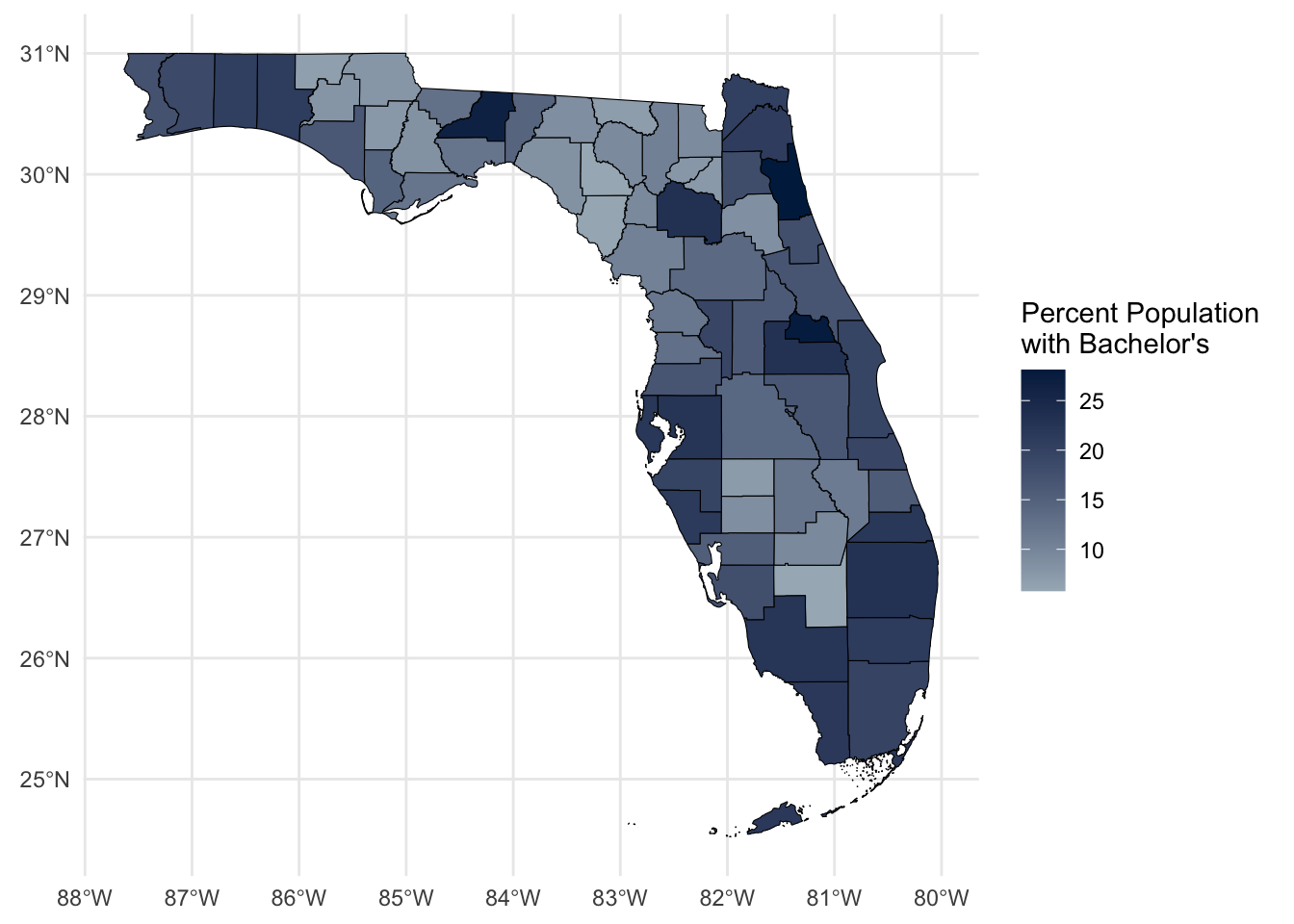

    Coordinate Reference System:
      User input: EPSG:4326
      wkt:
    GEOGCRS["WGS 84",
        ENSEMBLE["World Geodetic System 1984 ensemble",
            MEMBER["World Geodetic System 1984 (Transit)"],
            MEMBER["World Geodetic System 1984 (G730)"],
            MEMBER["World Geodetic System 1984 (G873)"],
            MEMBER["World Geodetic System 1984 (G1150)"],
            MEMBER["World Geodetic System 1984 (G1674)"],
            MEMBER["World Geodetic System 1984 (G1762)"],
            MEMBER["World Geodetic System 1984 (G2139)"],
            MEMBER["World Geodetic System 1984 (G2296)"],
            ELLIPSOID["WGS 84",6378137,298.257223563,
                LENGTHUNIT["metre",1]],
            ENSEMBLEACCURACY[2.0]],
        PRIMEM["Greenwich",0,
            ANGLEUNIT["degree",0.0174532925199433]],
        CS[ellipsoidal,2],
            AXIS["geodetic latitude (Lat)",north,
                ORDER[1],
                ANGLEUNIT["degree",0.0174532925199433]],
            AXIS["geodetic longitude (Lon)",east,
                ORDER[2],
                ANGLEUNIT["degree",0.0174532925199433]],
        USAGE[
            SCOPE["Horizontal component of 3D system."],
            AREA["World."],
            BBOX[-90,-180,90,180]],
        ID["EPSG",4326]]
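
The `AXIS` and `ORDER` entries in the WKT above state that EPSG:4326 lists latitude first and longitude second. As a rough illustration, the sketch below pulls that axis order out of an abbreviated copy of the WKT using only Python's standard `re` module. The names `WKT` and `axis_order` are hypothetical and chosen for this example. The regular expression is a shortcut that assumes the `AXIS[... ORDER[n]` layout shown here; it is not a general WKT parser.

```python
import re

# Abbreviated copy of the axis-related portion of the WKT printed above.
WKT = '''GEOGCRS["WGS 84",
    CS[ellipsoidal,2],
        AXIS["geodetic latitude (Lat)",north,
            ORDER[1],
            ANGLEUNIT["degree",0.0174532925199433]],
        AXIS["geodetic longitude (Lon)",east,
            ORDER[2],
            ANGLEUNIT["degree",0.0174532925199433]],
    ID["EPSG",4326]]'''


def axis_order(wkt):
    """Return (order, name, direction) tuples sorted by the ORDER value.

    Assumes every AXIS entry is immediately followed by its ORDER entry,
    as in the WKT above.
    """
    pattern = re.compile(r'AXIS\["([^"]+)",\s*(\w+),\s*ORDER\[(\d+)\]')
    axes = [
        (int(order), name, direction)
        for name, direction, order in pattern.findall(wkt)
    ]
    return sorted(axes)


if __name__ == "__main__":
    for order, name, direction in axis_order(WKT):
        print(order, name, direction)
```

For real work a CRS library is the safer choice. The point here is only that coordinates in this CRS are formally (latitude, longitude), which is worth checking whenever data arrives as (x, y) pairs.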