install.packages(c("tidymodels", "estimatr", "stargazer", "gtsummary"))Bringing It All Together (Feat. Basic Models)

- We are going to use (the tiniest portion of) our final new package today

tidymodels, as well asestimatrto fit a different kind of regression, and a couple of packages to make pretty regression output tables

- First off, this is not a stats lesson

- Some of you have taken years of stats classes, while others are yet to take any.

- The purpose of this lesson is meant to focus how everything we have learned this semester comes together when we begin to work with some simple models

- That said, it may still be a lot of information for those less familiar with stats.

- Fear not, there is no expectation to use of any this in your final assignment

- If this lesson starts to go beyond your comfort level, that’s completely fine! Try your best to follow along, and maybe revisit this lesson once you’re a little further along your stats journey

Data Preparation

- Before we can run any kind of models, we need to make sure our data is prepared

- This involves using skills from our data wrangling lessons such as

- Data Wrangling I

- Handling missing data

- Making sure our data is the right format (

numeric,factor,character, etc.) - Performing basic calculations (e.g., percentages, differences, etc.)

- Data Wrangling II

- Joining multiple data sets together

- Pivoting data wider and/or longer

- Data Wrangling III

- Cleaning up text data

- Transforming dates into

- Data Wrangling IV

- Performing any of the above tasks

across()multiple columns coalesce()-ing multiple columns into one variable

- Performing any of the above tasks

- For the purpose of today’s lesson, we are going to focus on two of these tasks, dealing with missing data, and making sure our data is in the right format

Handling Missing Data

When modeling, by default, R will simply drop any rows that have an

NAin any variable you are modeling on (this is a little different to the cautious R we ran into in Data Wrangling I)In real world applications, you need to think carefully about how you handle these…

Should I impute the missing data? If so, using what method?

Should I use this variable at all if it’s missing for a bunch of observations?

For this lesson, however, we are just going to drop

NAvalues so we can focus on the main contentThe below code uses the combines the logic we use for making

NAs in Data Wrangling I with the ability to work across multiple columns in Data Wrangling IVFirst, we read our data and

select()the columns we want to use

data <- read_csv("data/hsls-small.csv") |>

select(stu_id, x1sex, x1race, x1txmtscor, x1paredu, x1ses, x1poverty185, x1paredexpct)- Second we use a combination of

!,filter(),andif_any()to say… - “If a row…”

- “has a -8 or -9”

.fns = ~ . %in% c(-8, -9)

- “in any columns”

.cols = everything()

- “do NOT keep it”

filter(!)

- “has a -8 or -9”

data <- data |>

filter(! if_any(.cols = everything(),

.fns = ~ . %in% c(-8, -9)))Making Sure Our Data is the Right Format

- In our Data Viz I and Data Viz II lessons, we saw that for R to accurately plot categorical variables, we had to convert them into

factor()s- The same is true for using categorical variables in models

- Those more familiar with stats may know that you have to “dummy code” categorical variables as 0 and 1 with one category serving as the “reference level” and all other categories getting their own binary variable

- The wonderful thing is that R handles that all for us if we tell it to treat the variable as a

factor()

- The below code combines the logic of turning variables into a

factor()from Data Viz I with working across multiple columns for Data Wrangling IV to say - “Modify”

mutate()

- “Each of these columns”

across(.cols = c(stu_id, x1sex, x1race, x1paredu, x1poverty185)

- “Into a factor”

.fns = ~ factor(.)

data <- data |>

mutate(across(.cols = c(stu_id, x1sex, x1race, x1paredu, x1poverty185),

.fns = ~ factor(.)))With that, our data is ready for some basic analysis!

Note: In most real-world projects your data preparation will be much more thorough, usually taking up the vast majority of the lines of code in your entire project, this is just the bare minimum to have the models run

t-tests with t.test()

- One of the first inferential statistical tests you will have learned (or will learn) is the t-test

- For those unfamiliar, the basic concept of a t-test if the difference in means between two groups (i.e., the difference between treatment and control) is greater than the variability within those groups (i.e., random variance between people within the same group)

- If the difference between the groups is great enough compared to the variation between the groups, the t-test will be “statistically significant”

- This means we are (most often) 95% confident that the there is a genuine difference between the groups, and the difference is unlikely to be due to random chance

- There are also a handful of statistical assumptions we have to satisfy, which are beyond our scope here, but hopefully the general concept will hope those of you yet to take your stats foundations follow along

- For those unfamiliar, the basic concept of a t-test if the difference in means between two groups (i.e., the difference between treatment and control) is greater than the variability within those groups (i.e., random variance between people within the same group)

- Let’s compare the math score between male and female.

t.test(x1txmtscor ~ x1sex, data = data)

Welch Two Sample t-test

data: x1txmtscor by x1sex

t = 0.38555, df = 16321, p-value = 0.6998

alternative hypothesis: true difference in means between group 1 and group 2 is not equal to 0

95 percent confidence interval:

-0.2455338 0.3657777

sample estimates:

mean in group 1 mean in group 2

52.14309 52.08297 - Luckily, the code for

t.test()is actually very simple (as is the case for regression too)- The first argument is a

forumla, which for a t-test is justoutcome ~ groupwhere group must only have 2 levels - In this case, we are looking at math score as our outcome and sex as our group - The second argument is

data =which we supply our prepared data frame- Note: the pipe

|>doesn’t play as nicely with models as it does other commands it’s usually easier to just specifydata =in a new line (don’t pipe anything in)

- Note: the pipe

- The first argument is a

- This code simply prints out our

t.test()result- As our p-value is above 0.05, our result is not significant - This indicates there is not a significant difference between male and female math scores in our sample

Regression with lm()

The problem with t-tests for our research, is that they don’t provide any ability to control for external variables

- They work great in experimental setting with random-treatment-assignment, but in the messy world of educational research, that’s rarely what we have

What we far more commonly use is a regression (or more advanced methods that build off regression) which allows use to control for other variables

The basic premise of regression very much builds off the logic of t-tests, testing if the variance associated with our treatment variable is great enough compared to a) residual/random variance and b) variance associated with our control variables, to say with confidence that there is a significant difference associated with our treatment

- Overall, this looks relatively similar to our code above, with three main differences

- We use

lm()(which stands for linear model) instead oft.test() - Instead of our formula just being

x1txmtscor ~ x1sexwe have added+ x1poverty185 + x1pareduto “control” for these variables - We assigned

<-ourlm()results to an object rather than just spitting them out

- That’s because the

summary()function is much more useful forlm()objects, plus, we are going to explore thelm()object more in the next steps

regression <- lm(x1txmtscor ~ x1sex + x1poverty185 + x1paredu, data = data)

summary(regression)

Call:

lm(formula = x1txmtscor ~ x1sex + x1poverty185 + x1paredu, data = data)

Residuals:

Min 1Q Median 3Q Max

-32.748 -5.715 0.160 6.136 30.413

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 49.0485 0.3379 145.139 < 2e-16 ***

x1sex2 -0.0412 0.1429 -0.288 0.773

x1poverty1851 -3.0224 0.1729 -17.483 < 2e-16 ***

x1paredu2 1.3419 0.3234 4.149 3.36e-05 ***

x1paredu3 2.4834 0.3584 6.929 4.38e-12 ***

x1paredu4 6.1227 0.3507 17.460 < 2e-16 ***

x1paredu5 8.1726 0.3814 21.426 < 2e-16 ***

x1paredu7 10.7669 0.4294 25.074 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 9.161 on 16421 degrees of freedom

Multiple R-squared: 0.1605, Adjusted R-squared: 0.1601

F-statistic: 448.4 on 7 and 16421 DF, p-value: < 2.2e-16- Our results show that, sex still had no significant association with math scores, but, our control variables of poverty and parental education seem to have some very strong associations

Quick Question

- You may notice we actually have more variables in the regression table than we put in, why? What do they represent?

- That’s correct, they represent the different levels of our

factor()-ed categorical variables

Creating Pretty Regression Output Tables

- Running regressions in R is all well and good, but the output you see here isn’t exactly “publication ready”

- There are multiple ways of creating regression (and other model) output tables, each with their own pros and cons

- Here, we will go over three of the most common methods

stargazer Package

- One of the most common packages for getting “publication ready” regression tables is

stargazer- The code is very simple, at minimum, provide the regression model you fitted, and the type of table you want

- We are using “html” here so it formats for the website, you could use “text” or “latex”

- In general, the biggest drawback of

stargazeris the lack of flexibility and limited compatibility with formats other thanLaTeX

- Extra trick: To get captions and table numbers if we are using Quarto, we use the “chunk options” to cross reference them, not manually adding them to the table code

- See Intro to Quarto lesson for a refresher on captioning

- To get the table to appear like this we…

- Labeled the chunk

{r tbl-stargazer} - Added

#| tbl-cap: "Regression Table Using stargazer"as the first line

- Labeled the chunk

stargazer(regression, type = "html")| Dependent variable: | |

| x1txmtscor | |

| x1sex2 | -0.041 |

| (0.143) | |

| x1poverty1851 | -3.022*** |

| (0.173) | |

| x1paredu2 | 1.342*** |

| (0.323) | |

| x1paredu3 | 2.483*** |

| (0.358) | |

| x1paredu4 | 6.123*** |

| (0.351) | |

| x1paredu5 | 8.173*** |

| (0.381) | |

| x1paredu7 | 10.767*** |

| (0.429) | |

| Constant | 49.049*** |

| (0.338) | |

| Observations | 16,429 |

| R2 | 0.160 |

| Adjusted R2 | 0.160 |

| Residual Std. Error | 9.161 (df = 16421) |

| F Statistic | 448.368*** (df = 7; 16421) |

| Note: | p<0.1; p<0.05; p<0.01 |

gtsummary Package

- The

gtsummarypackage (which we saw in our Intro to Quarto lesson) is another option for creating tablestbl_regression()creates a pretty great table when you just provide the regression model- One great thing is how

gtsummaryhandles factors—it makes the it super-duper clear what is going on

tbl_regression(regression)| Characteristic | Beta | 95% CI | p-value |

|---|---|---|---|

| x1sex | |||

| 1 | — | — | |

| 2 | -0.04 | -0.32, 0.24 | 0.8 |

| x1poverty185 | |||

| 0 | — | — | |

| 1 | -3.0 | -3.4, -2.7 | <0.001 |

| x1paredu | |||

| 1 | — | — | |

| 2 | 1.3 | 0.71, 2.0 | <0.001 |

| 3 | 2.5 | 1.8, 3.2 | <0.001 |

| 4 | 6.1 | 5.4, 6.8 | <0.001 |

| 5 | 8.2 | 7.4, 8.9 | <0.001 |

| 7 | 11 | 9.9, 12 | <0.001 |

| Abbreviation: CI = Confidence Interval | |||

- With a couple of extra lines to

- handle variable labels

label = list(x1sex ~ "Sex" ... )

- add significance stars (without hiding the p-value of confidence intervals)

add_significance_stars(hide_ci = FALSE, hide_p = FALSE)

- add model statistics

add_glance_source_note(include = c(r.squared, nobs))

- force the standard error column to show

modify_column_unhide(std.error)

- handle variable labels

- we can get something that looks pretty great

- Similarly to above, we add table numbers and captions using Quarto cross referencing

{r tbl-gtsummary}#| tbl-cap: "Regression Table Using gtsummary"

tbl_regression(regression,

label = list(x1sex ~ "Sex",

x1poverty185 ~ "Below Poverty Line",

x1paredu ~ "Parental Education")) |>

add_significance_stars(hide_ci = FALSE, hide_p = FALSE) |>

add_glance_source_note(include = c(r.squared, nobs)) |>

modify_column_unhide(std.error)| Characteristic | Beta1 | SE | 95% CI | p-value |

|---|---|---|---|---|

| Sex | ||||

| 1 | — | — | — | |

| 2 | -0.04 | 0.143 | -0.32, 0.24 | 0.8 |

| Below Poverty Line | ||||

| 0 | — | — | — | |

| 1 | -3.0*** | 0.173 | -3.4, -2.7 | <0.001 |

| Parental Education | ||||

| 1 | — | — | — | |

| 2 | 1.3*** | 0.323 | 0.71, 2.0 | <0.001 |

| 3 | 2.5*** | 0.358 | 1.8, 3.2 | <0.001 |

| 4 | 6.1*** | 0.351 | 5.4, 6.8 | <0.001 |

| 5 | 8.2*** | 0.381 | 7.4, 8.9 | <0.001 |

| 7 | 11*** | 0.429 | 9.9, 12 | <0.001 |

| Abbreviations: CI = Confidence Interval, SE = Standard Error | ||||

| R² = 0.160; No. Obs. = 16,429 | ||||

| 1 *p<0.05; **p<0.01; ***p<0.001 | ||||

- Reminder: We saw in Intro to Quarto how you can create matching descriptive statistics tables using the

tbl_summary()function- We also saw how you can force them to

as_kable()if needed for you output format - If you like

gtsummarytables and want to learn more, check out

- We also saw how you can force them to

“Homemade” Regression Tables with kable()

- While a little more work, we can also create our own table using the default

summary()andkable()like we saw in Intro to Quarto for descriptive tables- You might want to do this to specifically format a table in a way

gtsummarydoesn’t allow, or, to match some other tables you already created withkable

- You might want to do this to specifically format a table in a way

- First things first, let’s save the

summary()output to a new object

summary_object <- summary(regression)We will do this more below, but if you click on the object

summary_objectin the Environment (top right) you can see all the different pieces of information it holds- We are most interested in

coefficients, if you click on the right hand side of that row, you will see the codesummary_object[["coefficients"]]auto-populate

- Tip: This works for most objects like this

- We then turn that into as data frame with

as.data.frame() - Add a new column that contains the correct significance stars using

case_when() - Pipe all that into

kable()with updated column names and rounded numbers - Similarly to above, we add table numbers and captions using Quarto cross referencing

{r tbl-manual}#| tbl-cap: "Regression Table Using Kable"

- We are most interested in

summary_object[["coefficients"]] |>

as.data.frame() |>

mutate(sig = case_when(`Pr(>|t|)` < 0.001 ~ "***",

`Pr(>|t|)` < 0.01 ~ "**",

`Pr(>|t|)` < 0.05 ~ "*",

TRUE ~ "")) |>

kable(col.names = c("estimate", "s.e.", "t", "p", ""),

digits = 3)| estimate | s.e. | t | p | ||

|---|---|---|---|---|---|

| (Intercept) | 49.049 | 0.338 | 145.139 | 0.000 | *** |

| x1sex2 | -0.041 | 0.143 | -0.288 | 0.773 | |

| x1poverty1851 | -3.022 | 0.173 | -17.483 | 0.000 | *** |

| x1paredu2 | 1.342 | 0.323 | 4.149 | 0.000 | *** |

| x1paredu3 | 2.483 | 0.358 | 6.929 | 0.000 | *** |

| x1paredu4 | 6.123 | 0.351 | 17.460 | 0.000 | *** |

| x1paredu5 | 8.173 | 0.381 | 21.426 | 0.000 | *** |

| x1paredu7 | 10.767 | 0.429 | 25.074 | 0.000 | *** |

- There are other ways as well, but between these three options, you should be able to get what you want!

Predictions with lm()

When you fit a regression model in R, there is a lot more saved than you see with

summary()Since we have our

lm()object saved as regression, let’s start by taking a look inside it by clicking on the object in our environment (top right) panel - Confusing, right?- Most statistical models look something like this, it’s basically a collection of lists and tables containing different information about the model

There are functions such as

summary()that are great at pulling out the most commonly needed information without having to go manually digging through the model object, but sometimes, it can be useful to know it’s thereAnother great function is

predict()which extracts estimated values of the outcome variable based on the predictor variables (some other models usefitted()for the same purpose)For those more familiar with stats, you’ll know predicted values are often compared against the true values to see how strong the model is

- To start, let’s save a full set of predictions to a new columns in our data frame

data <- data |>

mutate(prediction = predict(regression))

data |> select(stu_id, x1txmtscor, prediction)# A tibble: 16,429 × 3

stu_id x1txmtscor prediction

<fct> <dbl> <dbl>

1 10001 59.4 57.2

2 10002 47.7 48.5

3 10003 64.2 59.8

4 10004 49.3 55.1

5 10005 62.6 55.2

6 10006 58.1 51.5

7 10007 49.5 50.3

8 10008 54.6 59.8

9 10009 53.2 50.4

10 10010 63.8 51.5

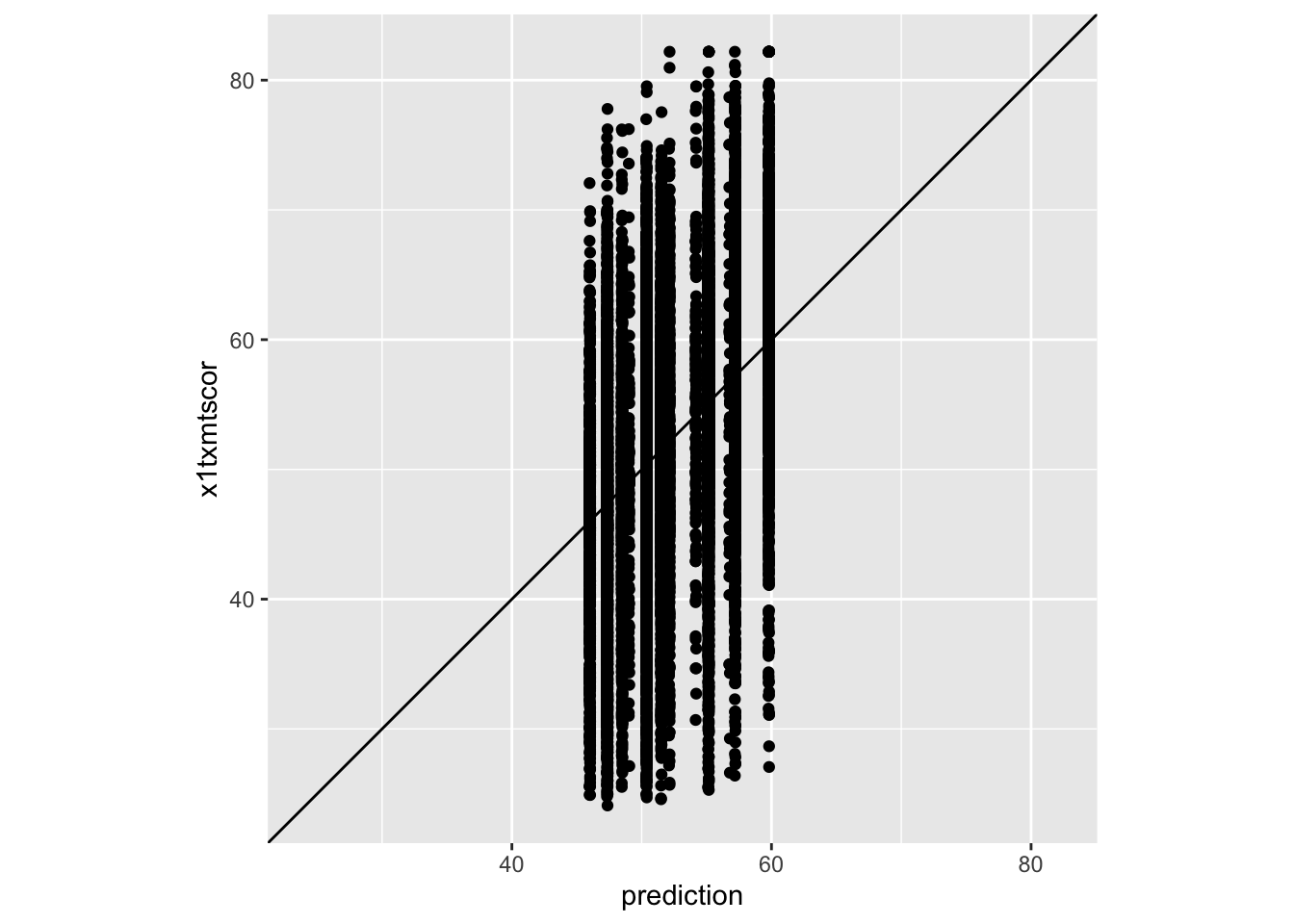

# ℹ 16,419 more rows- Next, we can compare these to our actual results using a simple plot (no formatting) from Data Viz I

- The only new things we add here is

geom_abline(slope = 1, intercept = 0)- This adds a reference line that represents a perfect 1 to 1 relationship (which would be if there was 0 prediction error)

coord_obs_pred()which is from thetidymodelspackage- This fixes the axes so that the predictions and observed values are plotted on the same scal

- The only new things we add here is

Quick Excercise

- Try removing the final line

coord_obs_pred()and see what happens. Which plot do you think is better?

ggplot(data,

aes(x = prediction,

y = x1txmtscor)) +

geom_point() +

geom_abline(slope = 1, intercept = 0) +

coord_obs_pred()

(Easier) Quick Question

- What do we think about our model? Does it look like it’s doing a great job of predicting? Why/why not?

(Harder) Quick Question

- You’ll notice our plot looks kind of clumped together, why do you think that it? What about the model would lead to that?

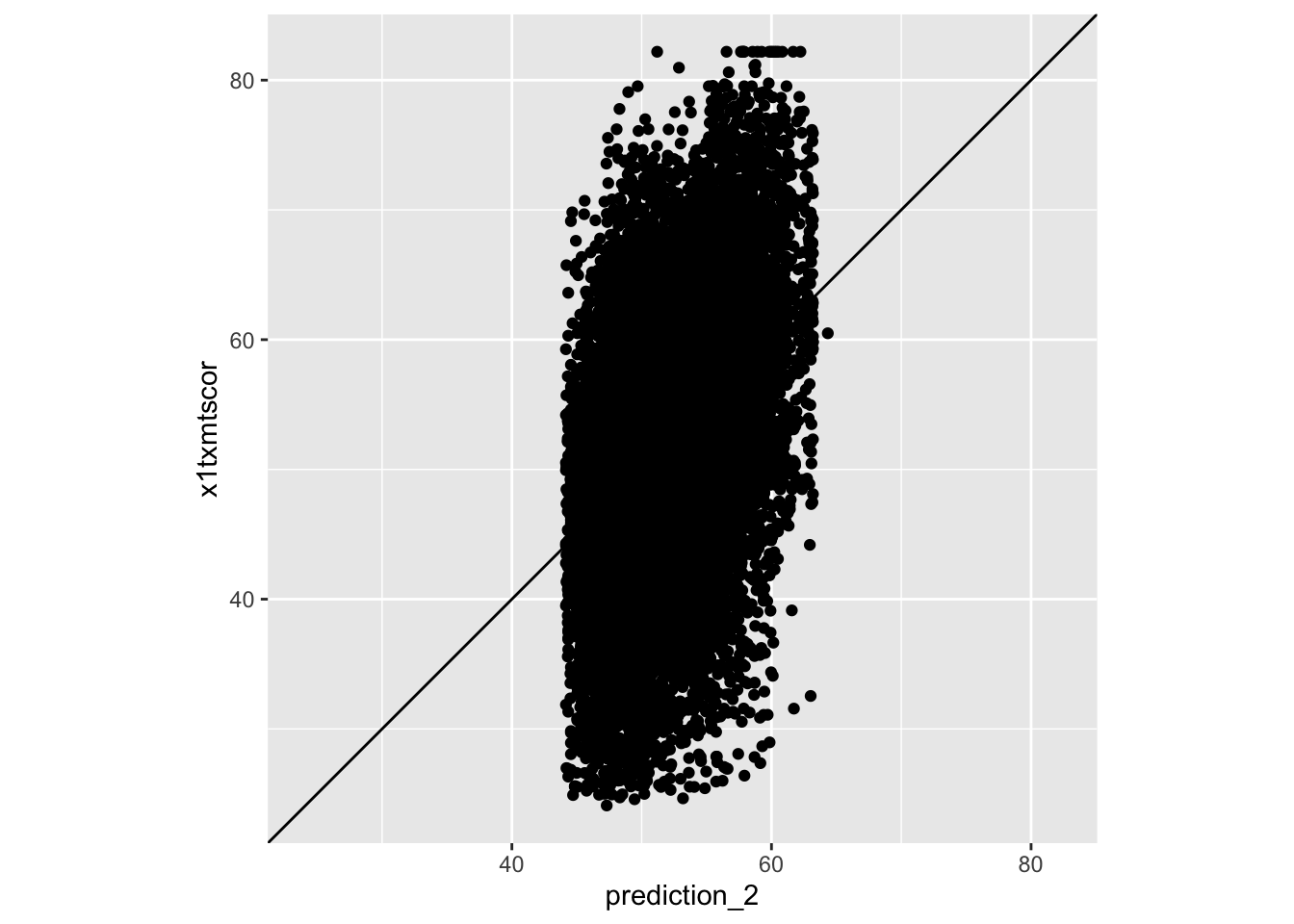

- Given what we just discussed, can we change one of the variables we are using in the model to make it less “clumpy” but caputre the same information?

regression_2 <- lm(x1txmtscor ~ x1sex + x1ses + x1paredu, data = data)

data <- data |>

mutate(prediction_2 = predict(regression_2))

ggplot(data,

aes(x = prediction_2,

y = x1txmtscor)) +

geom_point() +

geom_abline(slope = 1, intercept = 0) +

coord_obs_pred()

Quick Question

- Does that look better? What else is odd about our predictions?

- We can also use

predict()to estimate potential outcome values for new students who don’t have the outcome for - This is a common way you evaluate machine learning models

- If you think you’re model is a really good predictor (which ours is not) you may feel comfortable using something like this to help your office predict student outcomes/identify students in need of additional help

- To demonstrate this, we are first going to split out 10% of our data using

slice_sample()and drop the math score from it

data_outcome_unknown <- data |>

slice_sample(prop = 0.1) |>

select(-x1txmtscor)- Then, we can use

anti_join()which is basically the opposite of the joins we used in Data Wrangling II- It looks for every row in x that isn’t in y and keeps those

data_outcome_known <- anti_join(x = data, y = data_outcome_unknown, by = "stu_id")- Now, we can fit one more

lm()using our data we “know” the outcome for

regression_3 <- lm(x1txmtscor ~ x1sex + x1ses + x1paredu, data = data_outcome_known)- Finally, we can

predict()outcomes for the data we “don’t know” the outcome for- We add the

regression_3we just fitted as the model, same as before - But we also add

newdata = data_outcome_unknownto say predict the outcome for this new data, instead of extract the predictions the model originally made

- We add the

data_outcome_unknown <- data_outcome_unknown |>

mutate(prediction_3 = predict(regression_3, newdata = data_outcome_unknown))- Lastly, let’s see how similar our predictions we made using our model without the outcome were to those made when the outcome was known for everyone using

cor()to get the correlation

cor(data_outcome_unknown$prediction_2, data_outcome_unknown$prediction_3)[1] 0.9999789- Pretty close!

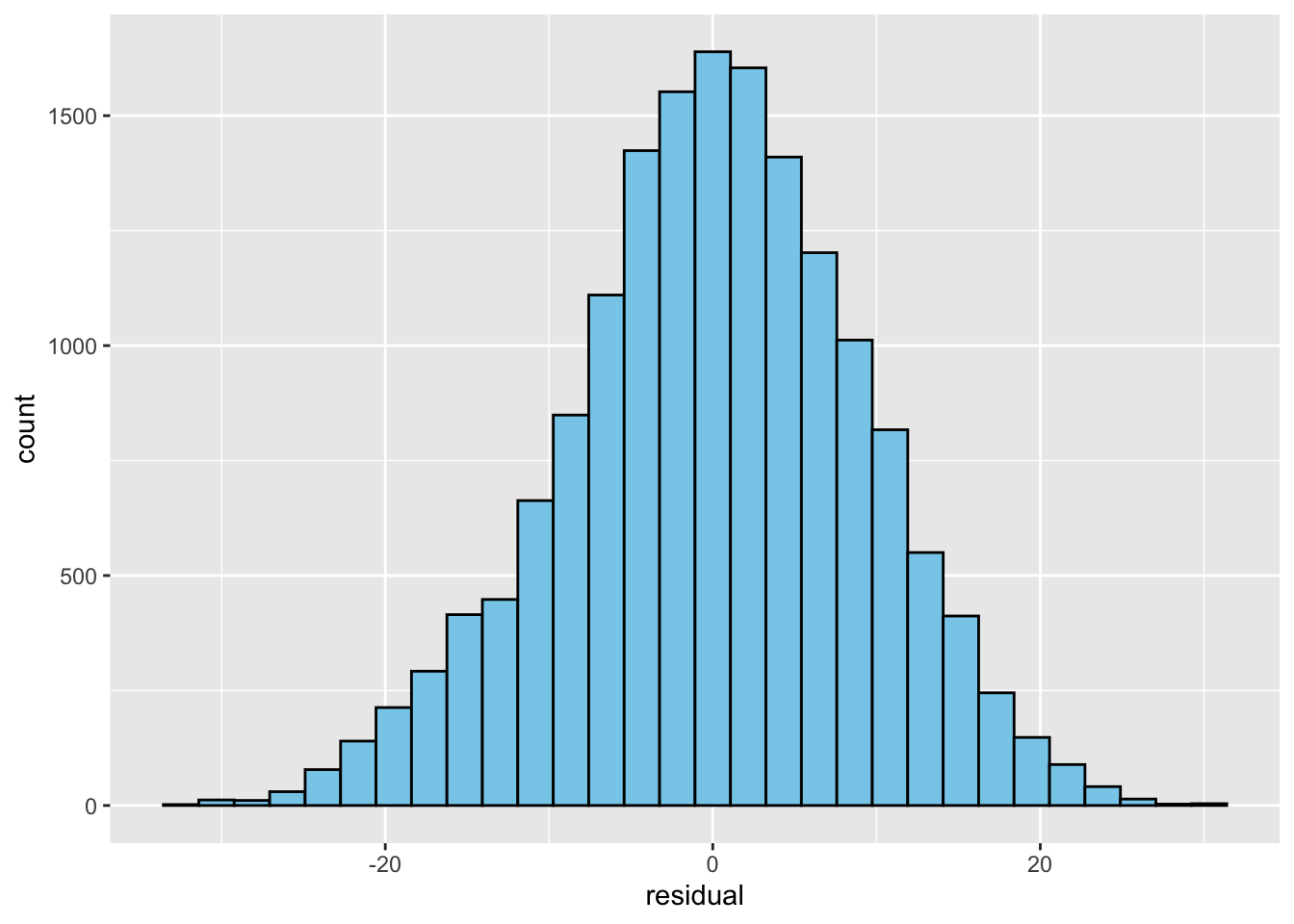

Checking Residuals

- Many of the assumptions relating to regression are tested by looking at the residuals

- We aren’t going to go over those assumptions, again, this is not a stats class

- But it might be useful to see how to get them out of a model object

- Let’s start by viewing the lm object again (environment, top right panel), then clicking on the little white box on the right hand side of the screen for the row “residuals” - That is a magic tip, if you ever want to get something specific out of a model object, often they’ll be something you can click on to generate the code needed to access it in the console

- For residuals, it is

regression_2[["residuals"]]

- For residuals, it is

data <- data |>

mutate(residual = regression_2[["residuals"]])- Now, again, not to get too deep into assumptions, but one of the key things to check is that your residuals have a normal distribution

- So let’s revisit some Data Visualization I content and make a simple

ggplot()histogram to of them

- So let’s revisit some Data Visualization I content and make a simple

ggplot(data) +

geom_histogram(aes(x = residual),

color = "black",

fill = "skyblue")`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

- Wow, that is almost a perfect normal distribution!

- Bonus points: can anyone remember/think of something about the variable

x1txmtscorthat made this result quite likely? Think about what kind of score it is

formula() Objects

- The second from last thing is really simple, but, it can be a time & error saver if you want to get more advanced like our final step

- Above, we simply put our formula into the

t.test()orlm()command- Instead, we can actually specify it as a formula object first, then call that object, which has two advantages

- If we run multiple tests with the same formula, we only have to change it once in our code for updates

- Here, we will run both standard

lm()andlm_robust()from theestimatrpackage

- If we want to run multiple tests in a loop like below, it makes that possible too

- To demonstrate this, we will fit the same model using standard

lm()andlm_robust()which for those versed in stats, is one option we can use when we have a violation of homoskedasticity (homogeneity of variances)

regression_formula <- formula(x1txmtscor ~ x1sex + x1ses + x1paredu)

regression_4 <- lm(regression_formula, data = data)

tbl_regression(regression_4,

label = list(x1sex ~ "Sex",

x1ses ~ "Socio-Economic Status",

x1paredu ~ "Parental Education")) |>

add_significance_stars(hide_ci = FALSE, hide_p = FALSE) |>

add_glance_source_note(include = c(r.squared, nobs)) |>

modify_column_unhide(std.error)| Characteristic | Beta1 | SE | 95% CI | p-value |

|---|---|---|---|---|

| Sex | ||||

| 1 | — | — | — | |

| 2 | -0.04 | 0.142 | -0.32, 0.24 | 0.8 |

| Socio-Economic Status | 3.8*** | 0.163 | 3.5, 4.1 | <0.001 |

| Parental Education | ||||

| 1 | — | — | — | |

| 2 | -0.05 | 0.333 | -0.70, 0.61 | 0.9 |

| 3 | -0.16 | 0.390 | -0.92, 0.61 | 0.7 |

| 4 | 2.0*** | 0.424 | 1.2, 2.9 | <0.001 |

| 5 | 2.6*** | 0.495 | 1.6, 3.5 | <0.001 |

| 7 | 2.7*** | 0.609 | 1.5, 3.9 | <0.001 |

| Abbreviations: CI = Confidence Interval, SE = Standard Error | ||||

| R² = 0.172; No. Obs. = 16,429 | ||||

| 1 *p<0.05; **p<0.01; ***p<0.001 | ||||

regression_robust <- lm_robust(regression_formula, data = data, se_type = "stata")

tbl_regression(regression_robust,

label = list(x1sex ~ "Sex",

x1ses ~ "Socio-Economic Status",

x1paredu ~ "Parental Education")) |>

add_significance_stars(hide_ci = FALSE, hide_p = FALSE) |>

add_glance_source_note(include = c(r.squared, nobs)) |>

modify_column_unhide(std.error)| Characteristic | Beta1 | SE | 95% CI | p-value |

|---|---|---|---|---|

| Sex | ||||

| 1 | — | — | — | |

| 2 | -0.04 | 0.142 | -0.32, 0.24 | 0.8 |

| Socio-Economic Status | 3.8*** | 0.165 | 3.5, 4.1 | <0.001 |

| Parental Education | ||||

| 1 | — | — | — | |

| 2 | -0.05 | 0.320 | -0.67, 0.58 | 0.9 |

| 3 | -0.16 | 0.377 | -0.89, 0.58 | 0.7 |

| 4 | 2.0*** | 0.417 | 1.2, 2.9 | <0.001 |

| 5 | 2.6*** | 0.489 | 1.6, 3.5 | <0.001 |

| 7 | 2.7*** | 0.621 | 1.5, 3.9 | <0.001 |

| Abbreviations: CI = Confidence Interval, SE = Standard Error | ||||

| R² = 0.172; No. Obs. = 16,429 | ||||

| 1 *p<0.05; **p<0.01; ***p<0.001 | ||||

Modeling Programatically with Loops

- Finally, we can also bring in content from Functions & Loops and fit regression models using loops

- This is kind of thing you might want to do if you are testing the same model on a set of outcomes

Quick Question

- Thinking back to that lesson, why might we want to go through the hassle of fitting regressions using loops? What are the advantages of using loops vs coding it all out separately?

- For example, we might be interested in modeling both a students math score and their parental education expectation

- We make a list containing our outcome variables (x1txmtscor and x1paredexpct)

- Use a

for()loop to loop through these outcomes, whichpaste()s i (which takes on the name of each outcome variable) into the formula and then runs the model

outcomes <- c("x1txmtscor", "x1paredexpct")

for(i in outcomes) {

print(i)

loop_formula <- formula(paste0(i, "~ x1sex + x1ses + x1paredu"))

loop_regression <- lm(loop_formula, data = data)

tbl_regression(loop_regression,

label = list(x1sex ~ "Sex",

x1ses ~ "Socio-Economic Status",

x1paredu ~ "Parental Education")) |>

add_significance_stars(hide_ci = FALSE, hide_p = FALSE) |>

add_glance_source_note(include = c(r.squared, nobs)) |>

modify_column_unhide(std.error) |>

print()

}| Characteristic | Beta1 | SE | 95% CI | p-value |

|---|---|---|---|---|

| Sex |

|

|

|

|

| 1 | — | — | — |

|

| 2 | -0.04 | 0.142 | -0.32, 0.24 | 0.8 |

| Socio-Economic Status | 3.8*** | 0.163 | 3.5, 4.1 | <0.001 |

| Parental Education |

|

|

|

|

| 1 | — | — | — |

|

| 2 | -0.05 | 0.333 | -0.70, 0.61 | 0.9 |

| 3 | -0.16 | 0.390 | -0.92, 0.61 | 0.7 |

| 4 | 2.0*** | 0.424 | 1.2, 2.9 | <0.001 |

| 5 | 2.6*** | 0.495 | 1.6, 3.5 | <0.001 |

| 7 | 2.7*** | 0.609 | 1.5, 3.9 | <0.001 |

| Abbreviations: CI = Confidence Interval, SE = Standard Error | ||||

| R² = 0.172; No. Obs. = 16,429 | ||||

| 1 p<0.05; p<0.01; p<0.001 | ||||

| Characteristic | Beta1 | SE | 95% CI | p-value |

|---|---|---|---|---|

| Sex |

|

|

|

|

| 1 | — | — | — |

|

| 2 | 0.47*** | 0.040 | 0.39, 0.55 | <0.001 |

| Socio-Economic Status | 0.28*** | 0.046 | 0.19, 0.38 | <0.001 |

| Parental Education |

|

|

|

|

| 1 | — | — | — |

|

| 2 | -0.39*** | 0.094 | -0.58, -0.21 | <0.001 |

| 3 | -0.28* | 0.110 | -0.50, -0.07 | 0.010 |

| 4 | -0.08 | 0.119 | -0.31, 0.16 | 0.5 |

| 5 | 0.29* | 0.139 | 0.02, 0.56 | 0.037 |

| 7 | 0.85*** | 0.172 | 0.52, 1.2 | <0.001 |

| Abbreviations: CI = Confidence Interval, SE = Standard Error | ||||

| R² = 0.049; No. Obs. = 16,429 | ||||

| 1 p<0.05; p<0.01; p<0.001 | ||||

Use the hsls_small.csv data set from the lesson answer the following questions

- You will need to use variables on math scores, students’ poverty level, postsecondary attendance, sex, family income, parental education

Hint: You’re going to need the code book to identify the necessary variables

Question One

a) Conduct a t-test to compare math scores between students below 185% of the federal poverty line and those above it. Is there a statistically significant difference?

b) Make a plot to visualize the difference.

Question Two

a) Fit a logistic regression predicting postsecondary attendance using math score, sex, family income, and parental education.

Hint: Logistic regression is what you want to use when your outcome variable is binary (0 or 1) instead of a continuous variable. You might want to Google to find out what function you need for fitting a logistic regression.

b) Create a nicely formatted output table using stargazer or gtsummary for the model from 2a. What are some significant predictors based on your model results?

Submission

Once complete turn in the .qmd file (it must render/run) and the rendered PDF to Canvas by the due date (usually Tuesday 12:00pm following the lesson). Assignments will be graded before next lesson on Wednesday in line with the grading policy outlined in the syllabus.

Solution

## -----------------------------------------------------------------------------

##

##' [PROJ: EDH 7916]

##' [FILE: Modeling Basics Solution]

##' [INIT: January 12 2025]

##' [AUTH: Jue Wu]

##

## -----------------------------------------------------------------------------

setwd(this.path::here())

## ---------------------------

##' [Libraries]

## ---------------------------

library(tidyverse)

library(stargazer)

library(gtsummary)

## ---------------------------

##' [Q1]

## ---------------------------

data <- read_csv("data/hsls-small.csv") |>

select(stu_id, x1sex, x1txmtscor, x1paredu, x1poverty185, x4evratndclg, x1famincome)

data <- data |>

filter(! if_any(.cols = everything(),

.fns = ~ . %in% c(-8, -9)))

data <- data |>

mutate(across(.cols = c(stu_id, x1sex, x1paredu, x1poverty185, x1famincome),

.fns = ~ factor(.)))

# 1a

t.test(x1txmtscor ~ x1poverty185, data = data)

# 1b

ggplot(data, aes(x = x1poverty185, y = x1txmtscor, fill = x1poverty185)) +

geom_boxplot() +

labs(x = "Poverty Status",

y = "Math Score",

fill = "Poverty Level") +

theme_minimal() +

scale_fill_discrete(labels = c("Below 185%", "Above 185%"))

## ---------------------------

##' [Q2]

## ---------------------------

# 2a

logit_reg <- glm(x4evratndclg ~ x1txmtscor + x1sex + x1famincome + x1paredu, data = data)

summary(logit_reg)

# 2b

# stargazer

stargazer(logit_reg, type = "html")

# gtsummary

tbl_regression(logit_reg,

label = list(x1txmtscor ~ "Math Score",

x1sex ~ "Sex",

x1famincome ~ "Family Income",

x1paredu ~ "Parental Education")) |>

add_significance_stars(hide_ci = FALSE, hide_p = FALSE) |>

modify_column_unhide(std.error)

## -----------------------------------------------------------------------------

##' *END SCRIPT*

## -----------------------------------------------------------------------------